How to advertise for funds off-the-page. Part 2

Papworth’s principles of press advertising. Part 2: Test, test and test again.

- Written by Andrew Papworth

- Added May 18, 2014


Threat of wear-out

It is always important to have a banker advertisement that you know you can rely on. This is an advertisement that has proved itself over time to be consistently reliable. But that alone is not enough. Your banker advertisement will inevitably start to decline at some point, even to become ineffective. This is known as ‘wear-out’ – and it can happen with alarming suddenness. So that you’re not at a disadvantage when this happens, you need to keep trying to find other advertisements – including other treatments of the same strategy and radically different strategies – that are as good as, if not better than, the banker.

Attention to detail

You also need to test constantly the details of the business end of the banker advertisement (i.e. any coupon or calls to action) in a continuing effort to make improvements to its performance. It is essential to keep an open mind about the potential value of various response techniques. What works for one charity may not work so well for another. Some techniques may be particularly effective for some target audiences but not for others. The only way to be sure about what works best for you is to carry out a carefully planned and highly disciplined programme of testing in which each element – no matter how small – is tested with a sufficiently large sample to be significant. But a test is never conclusive for all time. The testing programme needs to be continuous so that previous findings can be validated after a decent interval as circumstances change.

Some examples of the elements which need testing systematically and one by one are:

- With and without coupon.

- Use of a free or prepaid postal address.

- Use of free telephone number.

- Use of text, e-mail, web address, etc.

- One-off donation v. regular direct debit v. both.

- Methods of payment.

- Layout of forms to make them user-friendly.

- Personalisation of reply address.

- Use of incentives, offers, feedback, etc.

- The range and order of suggested donations.

The importance of measurement criteria

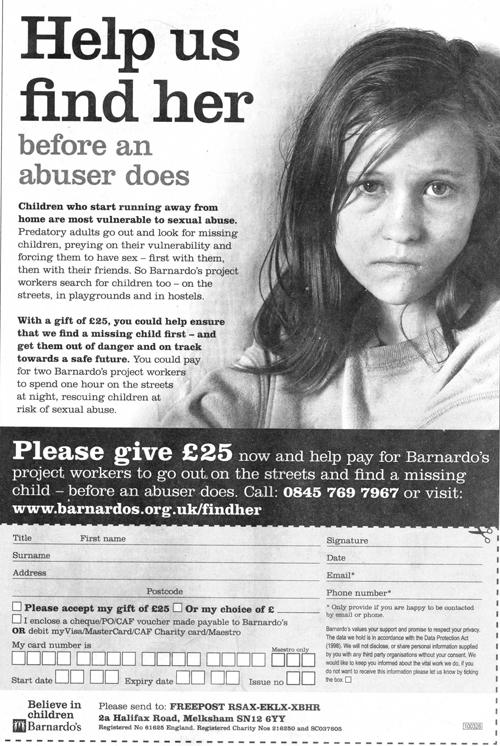

There are only two important criteria by which to evaluate fundraising print items: their long-term return on investment and their impact on the brand’s reputation and image. When considering the impact on reputation and image it is essential to bear in mind that – as pointed out in part 1 – the people who actually respond as donors, sponsors, members, or enquirers are always a tiny proportion of those who will have seen the item and a quite small proportion even of those who have read it. Almost by definition, therefore, the actual respondents will be atypical. This is important when considering a challenging or contentious approach. An approach that works for the ultra-committed and the already well-informed – and may therefore deliver a good return on investment – could easily damage the brand by antagonising the wider audience who see or read it but do nothing except seethe and make a mental note never to support you.

Measuring return on investment is by no means straightforward. It’s not just a matter of taking the cost of producing the advertisement and buying the space in the publication and dividing them by the cash coming in. It clearly needs to take account of the projected lifetime income from the respondent and the cost of looking after that supporter throughout that lifetime. Care needs to be exercised in taking into account the creative or design costs: those for a successful execution with a long life can be written off over that life, whereas those for a brave failure fall entirely on its few insertions and will look horrendous.
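The arithmetic described above can be sketched in a few lines. This is only an illustration, not a standard formula: the function name, its parameters and all the figures are hypothetical, and it assumes that lifetime income and the lifetime cost of care can each be estimated per respondent.

```python
def lifetime_roi(media_cost, creative_cost, insertions_sharing_creative,
                 respondents, lifetime_income_per_respondent,
                 lifetime_care_cost_per_respondent):
    """Net lifetime income per pound of acquisition cost for one insertion."""
    # Write the creative cost off across every insertion that reuses the execution.
    creative_share = creative_cost / insertions_sharing_creative
    acquisition_cost = media_cost + creative_share
    # Lifetime income less the cost of looking after each supporter.
    net_lifetime_income = respondents * (
        lifetime_income_per_respondent - lifetime_care_cost_per_respondent
    )
    return net_lifetime_income / acquisition_cost


# Hypothetical figures: the same execution used 12 times versus only once.
long_life = lifetime_roi(4000, 6000, 12, 100, 120, 30)
brave_failure = lifetime_roi(4000, 6000, 1, 100, 120, 30)
print(long_life, brave_failure)
```

With these invented numbers the same response looks more than twice as good when the creative cost is spread over twelve insertions rather than carried by one, which is the point about brave failures made above.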

Making sense of tests

It is important to guard against testing the elements of fundraising ads sequentially – e.g. publishing one version of an advertisement and then another with a variation some time later. For instance, imagine you want to decide on the value of providing a free or prepaid return postal address. Suppose you run your banker advertisement with a free address one week and the identical advertisement without it some weeks later. The first ad gets double the response of the second. Can you conclude that offering a free address is vindicated? No, of course not. It is impossible to separate the effects of, for instance, wear-out, seasonality and order of appearance from the impact of the variation under test.

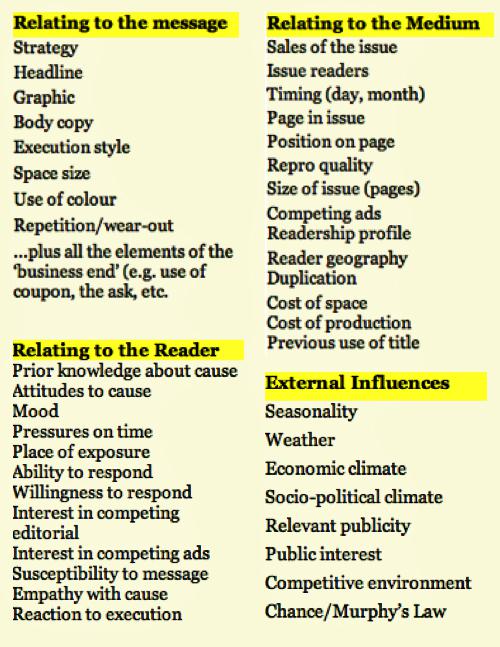

In fact, it is sobering to consider some of the factors that can combine to determine the response and return on investment to a particular insertion of a press advertisement. The list in Table 1 is probably not exhaustive.

Making sense of the differences

By and large, only the factors in the column headed ‘relating to your message’ are controllable; the other factors are neither predictable nor even easily recorded or measured after the event. Consider two ads appearing in the same Sunday newspaper in consecutive weeks. The weather on the day of the first insertion is appalling so everybody stays in and spends hours reading the papers. The next week the sun is shining and everybody leaves early to drive to the beach. To complicate things further this is the pattern in the south of the paper’s distribution area whilst in the north the picture is reversed. Or consider an ad appearing on a day when the lead story is about major scandals in charity administration costs. Unless you keep meticulous records of everything that could affect response – and to do so is impracticable – conclusions are likely to be dubious.

In theory, sense can be made of the differences if there are quite large numbers of identical advertisements to allow examination of meaningful averages, but very few charities generate sufficient cases to make this statistically viable. It is also a very slow process with a long time lag between test and results involving high investment in unproven test ads to accumulate sufficient data.

There are statistical techniques such as Latin squares (see the example in Table 3 in which three ads are rotated in three publications) which can go some way to equalise smaller tests by spreading the order effects and any publication variations. It’s not a perfect solution but taking the average results of the ads in each of the three columns is better than merely looking at sequential results.
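A rotation of this kind can be sketched as follows. It is a minimal illustration that assumes a simple cyclic rotation; the ad labels, the layout (rows as time periods, columns as publications) and the response figures are all hypothetical and are not taken from the table.

```python
def latin_square(ads):
    """Rotate the ads so each appears once per period and once per publication."""
    n = len(ads)
    # Row = period, column = publication; each row is the previous one shifted by one.
    return [[ads[(period + pub) % n] for pub in range(n)] for period in range(n)]


def average_by_ad(schedule, responses):
    """Average the responses of each ad over all the cells in which it ran."""
    totals, counts = {}, {}
    for schedule_row, response_row in zip(schedule, responses):
        for ad, replies in zip(schedule_row, response_row):
            totals[ad] = totals.get(ad, 0) + replies
            counts[ad] = counts.get(ad, 0) + 1
    return {ad: totals[ad] / counts[ad] for ad in totals}


schedule = latin_square(["A", "B", "C"])
# Hypothetical replies, laid out like the schedule (period by publication).
responses = [[120, 90, 60],
             [100, 70, 110],
             [80, 130, 95]]
print(schedule)
print(average_by_ad(schedule, responses))
```

Because every ad runs once in each period and once in each publication, the averages share out the order effects and publication differences evenly. They do not remove random variation.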

Regional split runs – whereby, say, the banker advertisement appears in some regional editions of a paper and the test variant in the remaining editions – have been used. These can be dangerous, however, unless you are absolutely sure that demographic, lifestyle and economic variations in the regions chosen will not confuse the interpretation of results, or unless you run the test several times, reversing the order, and then average the results.

Far and away the best and most reliable solution is to carry out exactly matched split runs where the test advertisement is interleaved alternately with the banker advertisement throughout the print run of a publication. Then you can be pretty sure that any difference in response is down to the ad content (but see the note below about Murphy’s Law*). This can be particularly valuable for testing the impact of single detailed changes to the banker advertisement because all the factors are held constant except the one difference under test. However, not all publications are keen on offering this facility and often – as well as paying a premium for it – you may well suffer in the position in which your advertisement appears. You could find it on a page read by very few people.

*Murphy’s Law (attributed to a very wise Irishman) says that it always pays to believe that ‘if anything can go wrong, it undoubtedly will’.

A proven solution

Carrying out a split run test is, however, particularly easy and effective when using loose inserts. It has proved possible, on long print runs, to interleave as many as six different executions or variants. Even if the overall performance of a particular insert on a given day is below average, the relative performance of any variant versus the banker is still indicative. If necessary a loose insert can take the form of a facsimile of a newspaper or magazine advertisement to add verisimilitude. Not least among the benefits of this method is that conclusions can be reached quickly and investment in ineffective variants can be strictly limited.

However, it is not a panacea. Calibration tests with identical copy in A and B split runs have been known to show as much as ±10 per cent random variation in response on occasion (which is another reason why Murphy’s Law is included in the list of external factors). This makes it unwise to rely on just one test before rolling out a finding into general use. It’s also worth remembering that things change over time and even if a particular technique was validated a few years ago it doesn’t mean that it still would be today. No doubt it paid to include fax numbers once upon a time.
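One way to guard against reading too much into a single split run is to check the result against both ordinary sampling error and the ±10 per cent calibration noise mentioned above. The sketch below uses a standard two-proportion z-test with a pooled standard error. Combining it with a 10 per cent noise band is an assumption made for illustration, not an established rule, and the function names and figures are hypothetical.

```python
import math


def relative_lift(replies_a, copies_a, replies_b, copies_b):
    """Relative difference in response rate of variant B against banker A."""
    return (replies_b / copies_b) / (replies_a / copies_a) - 1


def split_run_z(replies_a, copies_a, replies_b, copies_b):
    """Two-proportion z statistic using the pooled response rate."""
    pooled = (replies_a + replies_b) / (copies_a + copies_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / copies_a + 1 / copies_b))
    return (replies_b / copies_b - replies_a / copies_a) / std_error


def worth_retesting(replies_a, copies_a, replies_b, copies_b,
                    z_threshold=1.96, noise_band=0.10):
    """True only if the difference clears both the z threshold and the noise band."""
    z = split_run_z(replies_a, copies_a, replies_b, copies_b)
    lift = relative_lift(replies_a, copies_a, replies_b, copies_b)
    # 1.96 is the usual two-sided 5 per cent level; 0.10 is the assumed noise band.
    return abs(z) > z_threshold and abs(lift) > noise_band


# Hypothetical split runs of 100,000 copies each.
print(worth_retesting(200, 100_000, 215, 100_000))  # small difference
print(worth_retesting(200, 100_000, 260, 100_000))  # larger difference
```

Even a result that passes both checks is a candidate for a repeat test rather than a finding to roll out at once.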

But there is more to running press fundraising campaigns than finding a good banker advertisement and constantly improving it by judicious testing; there is also the allocation of budgets to various advertisements. The ‘high assay method’ – a term adopted from mining and drilling for oil – is a foolproof strategy by which returns on investment from fundraising advertisements and inserts can be optimised. It involves pouring investment into the most productive source up to the point when it becomes uneconomic.

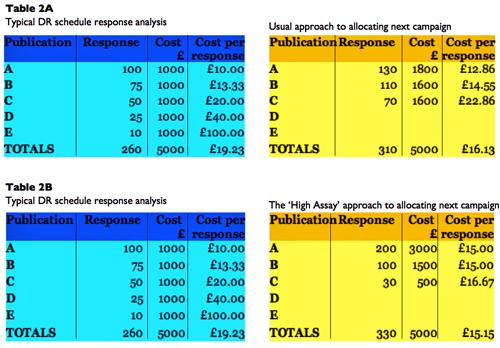

Tables 2A and 2B show the principles of the high assay method in simplified form.

In Table 2A the blue columns show schematically a typical response analysis resulting from a direct response campaign using five publications. It shows a couple of publications doing well (A and B); another couple, not so good (C and D) and one utter failure (E). The yellow columns in Table 2A show that typically, when planning the next campaign based on those results the two least cost-effective would be dropped and the money saved would be spread around the remaining three. Because there tend to be diminishing returns from increasing the investment in a publication, the cost per reply goes up in each of the publications left on the schedule.

Nevertheless, because the least cost-effective titles have been dropped, the overall cost per reply improves dramatically and everyone congratulates themselves. However, there is danger in this strategy that the following year another title will under-perform and that you’ll eventually run out of acceptable titles. A variant is to use some of the money saved by knocking off publications D and E to test other publications with similar readership profiles to A, B or C. But this is also a high-risk procedure because the new publications will be unproven.

The high assay approach is illustrated in Table 2B. It says that you should pour more money into your best publication until diminishing returns start to make it uneconomic. This can mean increasing the space size or the frequency of insertion or both.

Media planners are often reluctant to do this because it is counter-intuitive to reduce the cost-effectiveness of your best publication intentionally. But, as the yellow column in Table 2B shows, even though the costs per response of publications A and B rise, the cost-effectiveness of C improves dramatically because of reduced usage, and the result is a better overall cost per reply for the new campaign. It does mean that, because of the increased expenditure, there is a risk that publication A will wear out sooner or later, so it is vital to set aside a budget for identifying and testing viable alternative publications.

The essence of the high assay method is not to be frightened of backing success and to accept diminishing returns up to the point that it becomes uneconomic. Of course, in the real world all this can be turned upside-down by good media buying – almost any publication can become cost-effective at the right price and the threat of being dropped from the schedule can sometimes concentrate publishers’ minds wonderfully. It is also essential to search constantly for untried publications that might work and to test various ad shapes and sizes, positions in the publications and different days of the week in newspapers, seasons, frequency of insertions, closeness of insertions, etc.
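The logic of backing the most productive source until it becomes uneconomic can be sketched as a step-by-step allocation. This is only a toy model. It assumes, purely for illustration, that replies grow with the square root of spend (one simple way of producing diminishing returns), and the publication scales, budget, step size and cost ceiling are all hypothetical.

```python
import math


def replies(scale, spend):
    # Hypothetical diminishing-returns curve: replies rise with the square root of spend.
    return scale * math.sqrt(spend)


def high_assay_allocation(scales, budget, step, max_marginal_cost_per_reply):
    """Put each step of budget where it buys the most extra replies."""
    spend = {pub: 0 for pub in scales}
    remaining = budget
    while remaining >= step:
        # Extra replies each publication would yield from one more step of spend.
        gains = {pub: replies(scale, spend[pub] + step) - replies(scale, spend[pub])
                 for pub, scale in scales.items()}
        best = max(gains, key=gains.get)
        if gains[best] <= 0 or step / gains[best] > max_marginal_cost_per_reply:
            break  # even the best next step is uneconomic, so stop spending
        spend[best] += step
        remaining -= step
    return spend


# Hypothetical publications: A strong, B moderate, E an utter failure.
scales = {"A": 4.0, "B": 2.0, "E": 0.2}
print(high_assay_allocation(scales, budget=10_000, step=1_000,
                            max_marginal_cost_per_reply=50))
```

In this model most of the money goes to the strongest title, some to the next best once the leader’s marginal replies fall below it, and none to the failure. If the ceiling is set low enough, part of the budget stays unspent, which is the point at which funds are better kept for testing untried publications.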

And finally...

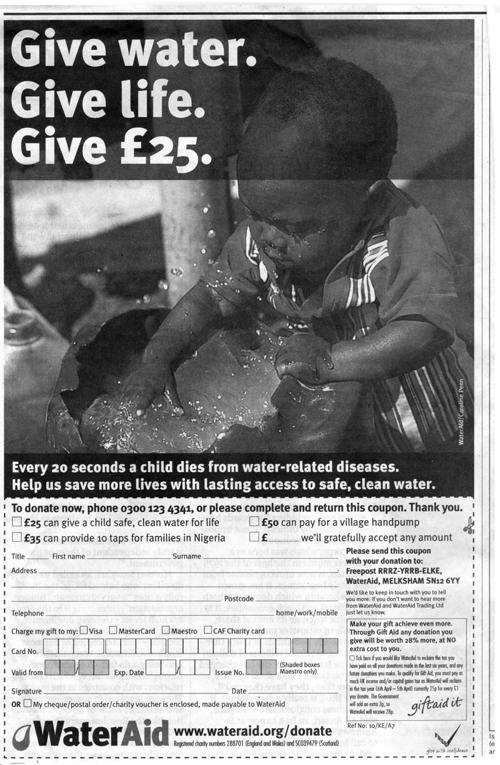

Using these techniques it is possible to ensure that your fundraising press campaigns are constantly optimised. These methods mostly apply equally to loose inserts and direct mail. The important thing is never to believe that you’ve cracked it. You must keep an open mind, question absolutely everything and never jump to conclusions about what is working and what isn’t until you’ve seen it conclusively and rigorously proved. Most of all don’t fall for the folklore that surrounds fundraising advertising. For instance, ‘everybody knows’ that it is important to be single-minded and that giving respondents choices lowers response. Well, don’t you believe it until you’ve tried it. It is indeed extremely important that the main proposition should be single-minded but there is no reason why the call to action shouldn’t give options. Take the example of the WaterAid advertisements shown earlier with their different propositions for a press advertisement and a loose insert. They both work perfectly well but it is intriguing to wonder what the return on investment would have been if the loose insert’s response form had offered the option of a £25 one-off gift and the advertisement’s coupon – perhaps larger – had contained a regular direct debit mandate.

Part 1 of this article, on how fundraising ads work, can be found here.

© Andrew Papworth 2010